Why This Matters For Startups In The Project Economy

Dario Amodei of Anthropic recently explained, in an interview with John Collison on the Cheeky Pint podcast, why his company's billion-dollar losses shouldn't worry shareholders or future investors.

Dario's framework treats each new AI model version as its own business unit. While the company as a whole shows massive red ink, he argues, individual models actually generate positive returns.

In other words, Dario is saying that each model, from development and launch until its (fast) obsolescence, is a project. I like and invest in project markets, so his worldview was worth digging into.

Dario’s argument has some interesting merits and sounds reasonable at first. But let’s look under the hood at what's happening with gross margins and moats across the AI industry, and view it from all sides.

tl;dr

- Some AI companies disguise negative gross margins through accounting tricks

- Dario’s pharma analogy breaks down without patents or switching costs

- Airlines provide a framework for understanding AI economics

- A perpetual arms race without moats (so far), and training customers to expect cheap excellence

- Verdict: Is Dario right or wrong?

Some AI companies disguise negative gross margins through accounting tricks

In the interview, Dario tried to explain away Anthropic's massive losses. His pitch was essentially this: don't look at us as one company burning billions. Instead, think of each AI model as its own little business with a separate P&L statement.

His numerical example painted a specific picture: invest $100 million training a model in 2023, and that model generates $200 million in revenue in 2024. Meanwhile, you're spending $1 billion on the next model, which will produce $2 billion in 2025, followed by a $10 billion investment in the generation after that. The overall corporate P&L shows escalating losses ($100M in 2023, $800M in 2024, $8B in 2025), but Dario argued that each individual model generates positive returns when viewed in isolation.
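To make the arithmetic concrete, here is a minimal Python sketch of the two views of the same cash flows. The first two models use the figures from Dario's example. The third model's $20B revenue is a hypothetical placeholder (the example only specifies its $10B cost), and the one-year lag between training and revenue is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class ModelProject:
    """One model generation treated as its own project (figures in $M)."""
    name: str
    train_year: int       # year the training cost is spent
    training_cost: float
    revenue: float        # assumed to be earned in the year after training


def project_profit(p: ModelProject) -> float:
    """Standalone project P&L: the model's revenue minus its own training cost."""
    return p.revenue - p.training_cost


def corporate_pnl(projects: list[ModelProject]) -> dict[int, float]:
    """Company P&L by calendar year: this year's revenue minus this year's training spend."""
    pnl: dict[int, float] = {}
    for p in projects:
        pnl[p.train_year] = pnl.get(p.train_year, 0) - p.training_cost
        pnl[p.train_year + 1] = pnl.get(p.train_year + 1, 0) + p.revenue
    return dict(sorted(pnl.items()))


projects = [
    ModelProject("2023 model", 2023, 100, 200),
    ModelProject("2024 model", 2024, 1_000, 2_000),
    # Hypothetical revenue: the interview example stops at the $10B spend.
    ModelProject("2025 model", 2025, 10_000, 20_000),
]

for year, result in corporate_pnl(projects).items():
    if year <= 2025:  # only the years covered by Dario's example
        print(f"{year}: company P&L {result:+,} $M")

for p in projects[:2]:
    print(f"{p.name}: project profit {project_profit(p):+,} $M")
```

In this sketch every project is profitable on its own while every calendar year shows a loss, because each year's revenue comes from a model ten times cheaper than the one currently being trained.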

Dario also said the payback periods on these models are quite attractive, suggesting nine-to-twelve-month paybacks that would be "very easy to underwrite" in traditional business terms. He compared this to customer acquisition costs, arguing that businesses routinely invest in nine-month customer paybacks. Fair enough!
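A payback period is only as good as the gross profit behind it and the time the model stays competitive. The sketch below is a simple, undiscounted payback calculation; the $100M cost, $10M monthly gross profit and useful-life values are hypothetical, not Anthropic figures.

```python
import math


def payback_months(upfront_cost: float, monthly_gross_profit: float) -> float:
    """Months of gross profit needed to recover an upfront cost (simple, undiscounted)."""
    if monthly_gross_profit <= 0:
        return math.inf  # a negative-margin product never pays back
    return upfront_cost / monthly_gross_profit


def pays_back_in_time(upfront_cost: float, monthly_gross_profit: float,
                      useful_life_months: float) -> bool:
    """True if the investment is recovered before the model is superseded."""
    return payback_months(upfront_cost, monthly_gross_profit) <= useful_life_months


# Hypothetical: $100M training run, $10M/month gross profit after launch.
print(payback_months(100, 10))          # 10.0
print(pays_back_in_time(100, 10, 12))   # True: superseded after a year
print(pays_back_in_time(100, 10, 6))    # False: superseded after six months
print(payback_months(100, -3))          # inf: negative gross margin
```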

This framing may sidestep more fundamental issues at the transaction level. Some AI companies have reportedly run gross margins of negative 30% even at hundreds of millions of dollars in annualized revenue, and that is before considering any other costs like cloud hosting or customer support.

The math gets murkier across the broader AI application ecosystem. Most successful AI applications downstream of foundation models like Anthropic's are essentially software wrappers around models provided by Anthropic, OpenAI, or Google. These companies excel at building interfaces and specialized functionality, but their cost structure revolves primarily around API calls to the underlying models.
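Here is a minimal sketch of how a wrapper's gross margin can go negative when it charges a flat subscription while paying per token upstream. The $20 plan and the $10-per-million-token API price are hypothetical placeholders, not any provider's actual pricing, and API usage is the only cost counted.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


def subscriber_margin(monthly_price: float, tokens_millions: float,
                      api_price_per_million: float) -> float:
    """Margin on one flat-price subscriber whose only direct cost is model API usage."""
    api_cost = tokens_millions * api_price_per_million
    return gross_margin(monthly_price, api_cost)


# Hypothetical: $20/month plan, $10 per million tokens paid to the model provider.
light_user = subscriber_margin(20, 0.5, 10)   # $5 of API cost
heavy_user = subscriber_margin(20, 2.6, 10)   # $26 of API cost
print(f"light user: {light_user:.0%}, heavy user: {heavy_user:.0%}")
# light user: 75%, heavy user: -30%
```

Because revenue is fixed per seat while cost scales with usage, the most engaged customers are the least profitable ones in this setup.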

Some other companies have reportedly used several techniques to make their margins look healthier than they are; The Information has published several investigative pieces on this. The most common approach redefines what counts as revenue versus cost. Instead of reporting true gross margins, a company might calculate margins only on paying customers while booking the cost of serving free users as a marketing expense below the gross margin line.

Think of it this way: imagine an airline saying "don't worry about these non-paying passengers on my flights. If I only count the seats that are paid for, I'm actually making money per seat." But those non-paying seats still burn fuel, add weight, and cost infrastructure money. Those costs don't disappear just because you moved them below the gross margin line.
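The airline point can be shown with a few lines of arithmetic. In this hypothetical sketch (all user counts, prices and serving costs are made up), moving free users' serving costs below the line turns a negative 30% gross margin into a positive 60% one, while the operating result does not change at all.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    users: int
    price: float         # $ per user per month
    serving_cost: float  # $ of inference per user per month


def margin(revenue: float, cogs: float) -> float:
    return (revenue - cogs) / revenue


def reported_view(paying: Cohort, free: Cohort) -> dict:
    """Free users' serving costs moved below the gross margin line as 'marketing'."""
    revenue = paying.users * paying.price
    cogs = paying.users * paying.serving_cost
    marketing = free.users * free.serving_cost
    return {"gross_margin": margin(revenue, cogs),
            "operating_result": revenue - cogs - marketing}


def blended_view(paying: Cohort, free: Cohort) -> dict:
    """Every user who consumes inference is counted in cost of revenue."""
    revenue = paying.users * paying.price + free.users * free.price
    cogs = paying.users * paying.serving_cost + free.users * free.serving_cost
    return {"gross_margin": margin(revenue, cogs),
            "operating_result": revenue - cogs}


paying = Cohort(users=100, price=20, serving_cost=8)  # hypothetical
free = Cohort(users=900, price=0, serving_cost=2)     # hypothetical

print(reported_view(paying, free))  # {'gross_margin': 0.6, 'operating_result': -600}
print(blended_view(paying, free))   # {'gross_margin': -0.3, 'operating_result': -600}
```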

Another technique some of these downstream AI players reportedly use is to move legitimate direct costs below the gross margin line. Customer service, integration work for enterprise customers, and even core engineering resources might be categorized as operating expenses rather than cost of goods sold.
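The same logic applies when direct costs are relabeled. This hypothetical income statement (figures invented for illustration) uses identical costs under two classifications. Which items truly belong in cost of goods sold is a judgment call; the strict version here simply assumes support and integration are direct costs.

```python
COSTS = {  # hypothetical annual figures, $M
    "api_inference": 700,
    "customer_support": 150,
    "enterprise_integration": 100,
    "sales_and_marketing": 200,
}
REVENUE = 1_000


def income_statement(revenue: float, costs: dict[str, float], cogs_items: set[str]) -> dict:
    """Split costs into COGS vs. opex by label, then report gross margin and operating income."""
    cogs = sum(v for k, v in costs.items() if k in cogs_items)
    opex = sum(v for k, v in costs.items() if k not in cogs_items)
    gross_profit = revenue - cogs
    return {"gross_margin": gross_profit / revenue,
            "operating_income": gross_profit - opex}


# Assumption: support and integration work are direct costs of delivering the product.
strict = income_statement(REVENUE, COSTS,
                          {"api_inference", "customer_support", "enterprise_integration"})
# Same costs, but only API spend is labeled cost of goods sold.
flattering = income_statement(REVENUE, COSTS, {"api_inference"})

print(strict)      # {'gross_margin': 0.05, 'operating_income': -150}
print(flattering)  # {'gross_margin': 0.3, 'operating_income': -150}
```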

The fundamental challenge might not be accounting practice alone. When businesses systematically destroy value at the unit level while attracting significant investment, it could signal that capital allocation has become detached from basic economics. Unlike traditional SaaS companies, which eventually prove their unit economics work, downstream AI companies might face structural challenges that intensify with growth rather than improve with scale. Something to keep in mind.

Dario’s pharma analogy breaks down without patents or switching costs

During the interview, one comparison kept coming up: pharmaceutical companies. Dario reasons that AI model development resembles drug development - massive upfront R&D costs followed by profitable commercialization periods. The analogy initially seems compelling since both industries require enormous capital investments before generating any revenue.

John Collison also drew an important distinction between cloud companies and AI model development. Cloud companies like AWS make continuous CapEx investments, adding new data centers in a steady, predictable pattern. AI models are discrete generational leaps, more like a fighter jet manufacturer developing the F-16 and then moving on to a completely new aircraft generation rather than continuously upgrading the same platform.

But there may be fundamental differences from pharmaceuticals that completely change the economic dynamics.

In pharma, when a company takes massive R&D risks to develop a blockbuster drug, it gains patent protection and regulatory barriers. Success essentially grants a mini-monopoly for 10-20 years, and that exclusivity period makes the "huge upfront burn, long payback" model economically viable.

With large language models, no equivalent protection exists. If Anthropic spends $1 billion (random number) training a new frontier model and achieves breakthrough performance, OpenAI or Google can release something comparable within six months. No patents protect the approach, no exclusivity exists, and users can switch with near-zero friction.

The pharma analogy might be more accurately stated this way: "I successfully developed this drug with massive R&D investment, but now I'm losing money on every pill I manufacture and distribute. Don't worry though, I'm developing an even better drug next year that will somehow fix everything."

That scenario would never fly in pharmaceuticals because once you've developed a successful drug, companies charge premium prices during their exclusivity period to recoup R&D costs and generate profits. They don't give pills away for free while hoping customers eventually decide to pay.

Consider how different this pharma model truly is from the airline industry. Airlines invest billions in aircraft fleets, then compete fiercely on similar routes with similar service offerings. They face constant pressure to upgrade equipment because competitors make similar investments. Even successful airlines operate on razor-thin margins despite significant capital requirements.

Real pharmaceutical companies enjoy monopoly pricing power during patent protection periods that AI companies simply cannot replicate. Without patents, regulatory barriers, or meaningful switching costs, AI companies might face commodity-like competition despite massive capital requirements.

Airlines provide a framework for understanding AI economics

The airline comparison might cut much deeper than surface-level similarities. Both industries require enormous upfront capital investments in assets that depreciate quickly. Both face pressure to constantly upgrade equipment to stay competitive. Both operate in markets where customers can easily switch between providers offering similar services.

But there's a crucial insight about customer behavior patterns that emerges from this comparison.

Airlines invest billions in aircraft fleets, then compete on routes where customers view services as largely commoditized. Despite significant capital requirements and operational complexity, airlines struggle to maintain pricing power because customers can easily compare options and switch based on price or schedule preferences.

AI companies might face remarkably similar dynamics. They invest billions training models, then compete for users who increasingly view AI capabilities as commoditized. Users can switch between ChatGPT, Claude, and other services with minimal friction. The switching costs that create moats in traditional SaaS businesses simply don't exist yet.

Airlines do maintain some moats through hub control and route networks. American Airlines' dominance at Dallas-Fort Worth or Delta's strength in Atlanta creates genuine competitive advantages. Frequent flyer programs also generate some customer stickiness, though less than in previous decades.

AI companies recognize this challenge and point to three potential moat categories they're trying to build. First, proprietary data flywheels through unique training sets and reinforcement learning signals that competitors can't replicate. Second, ecosystem lock-in via developer tools, API integrations, and deep enterprise contracts that make switching costly. Third, brand and trust positioning - Anthropic bets heavily on the "safety and alignment" angle to differentiate from competitors.

The problem is that none of these moats feel as defensible as traditional business advantages. Unlike Spotify, where users invest time building playlists and discovering content, or enterprise SaaS platforms where companies integrate workflows deeply into their operations, AI model switching costs remain minimal. Even enterprise contracts can be renegotiated when better models emerge at competitive prices.

The arms race becomes self-reinforcing because of "scaling laws", which Dario often cites: the predictable relationship between model size, training compute, and performance. Each company must keep investing in larger, more capable models because standing still means falling behind. But these investments don't create sustainable competitive advantages, since competitors can match capabilities relatively quickly.

This pattern produces what is often called the "Red Queen effect": companies must run faster and faster just to hold their competitive position. In Lewis Carroll's Through the Looking-Glass, Alice discovers that in the Red Queen's country you have to keep running to stay in the same place. Scaling laws ensure this dynamic continues until either the economics break down or some fundamental limit is reached.

The customer behavior training aspect makes this particularly challenging. By offering advanced AI capabilities for free or at heavily subsidized prices, companies train users to expect cutting-edge technology without paying premium prices.

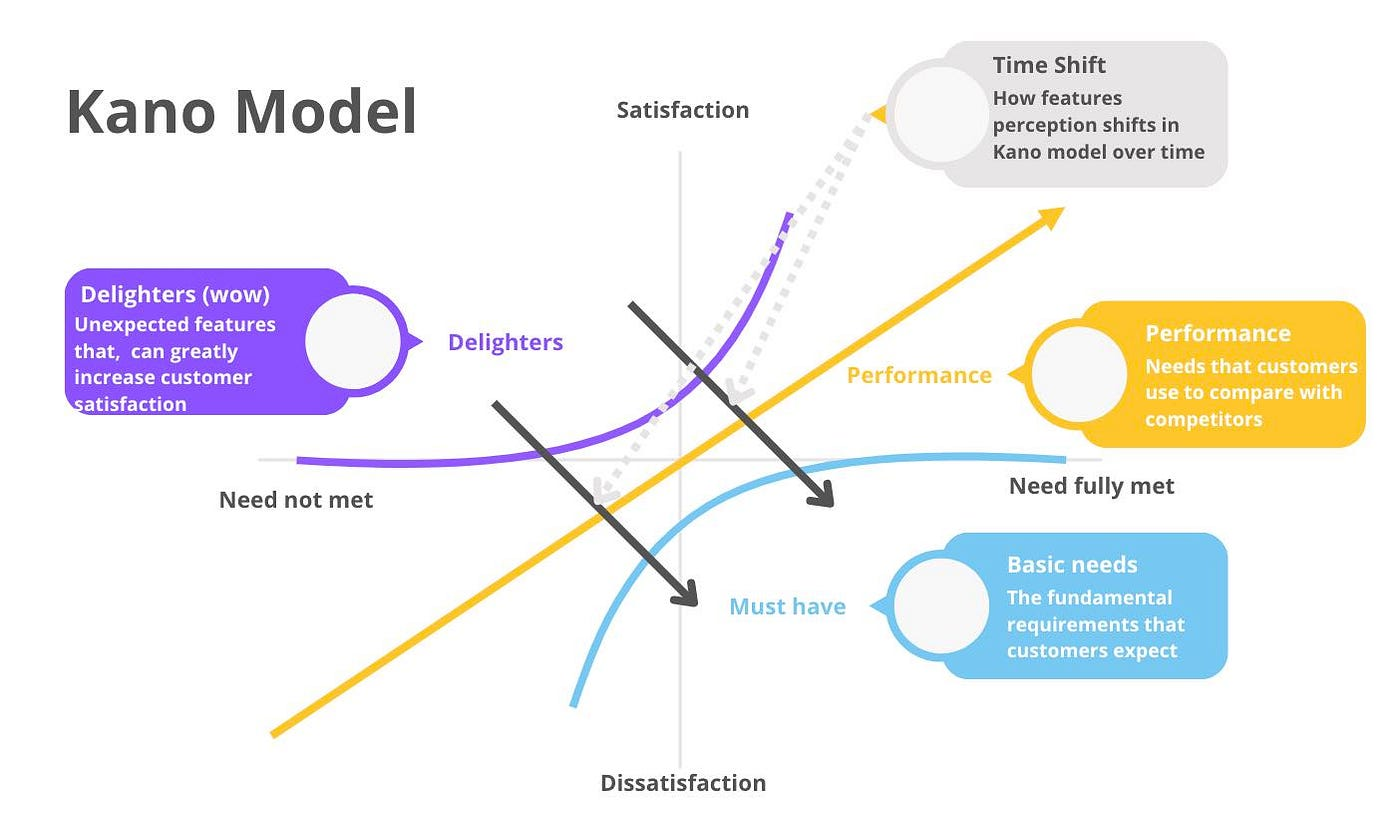

Think about the Kano model, where what was a "wow" feature last year becomes a basic expectation this year. If you have to compete on constantly deteriorating wow features that quickly become obsolete, and everyone has information symmetry about what competitors are working on because development cycles are so quick, you're training consumer behavior to expect great stuff for free.

It's like training airline customers that it's completely normal to get cheap or free business class seats from last year's fleet configuration. Next year you're upgrading to compete with five competitors who are flying upgraded first class and also giving away first class seats. Meanwhile, you still have free economy seats because you want customers to get familiar with your brand and aircraft.

This creates an environment where companies can say "don't worry, next year we'll become profitable because our customers will stay with us" - but that argument starts to feel less convincing when the entire competitive dynamic works against customer loyalty and sustainable pricing.

AI companies currently might operate more like airlines than pharmaceutical companies - high capital requirements, commoditized services, and limited pricing power despite significant operational complexity. The industry might need to develop genuine moats beyond model performance before unit economics can become sustainable at scale.

A perpetual arms race without moats (so far), and training customers to expect cheap excellence

There might be a fundamental problem with current AI company economics that extends beyond accounting tricks or temporary pricing strategies. The industry has possibly created an unsustainable dynamic where companies compete primarily on capital intensity rather than defensible value creation.

A potential framework for building sustainable AI businesses might require three elements. First, develop proprietary data advantages that competitors cannot easily replicate through pure capital investment. Second, create genuine switching costs through deep integration with customer workflows rather than just interface improvements. Third, focus on market segments where performance differences translate into measurable business value rather than general consumer applications where capabilities quickly become commoditized.

The companies that survive the next market correction could be those that solve specific business problems rather than trying to build general-purpose AI platforms. They might charge sustainable prices from day one rather than training customers to expect premium capabilities for free. They could build moats through data network effects, customer integration, or vertical specialization rather than just having the largest training budget.

For investors, this might suggest demanding proof of sustainable unit economics before considering massive valuations. For entrepreneurs, it could mean focusing on creating genuine customer value that justifies premium pricing rather than hoping scale will eventually solve fundamental margin problems.

The current AI boom might share more characteristics with airline industry dynamics than pharmaceutical innovation cycles. Until companies prove they can build sustainable moats beyond model performance, the industry might continue facing high capital intensity with limited pricing power.

Verdict: Is Dario right or wrong?

Correct: Foundation AI models are projects, and their profitability should be accounted for per project

My personal viewpoint is that - in perfect isolation and ignoring various contextual assumptions you need to make - Dario does have a point when he argues that each model is a new R&D project that should be treated as its own project P&L.

We here at Foundamental invest in the Project Economy. We understand project cycles and profitability. Fun fact: 22-40% of the world economy is delivered through projects.

We know that projects (not just their companies) must be resource- and profitability-controlled:

- Terminal Value: A project by definition is finite. This stands in stark contrast to the general management assumption of “going concern” at a company level. So project accounting enables benchmarking across projects and underpins terminal value by showing each project’s standalone economics.

- Temporary Resource Allocation: Clarifies where capital, people, and time generate the best returns.

- Performance Of Resources Until And After Termination: A project-specific P&L makes profitability and execution quality transparent at the project level, where the resources are spent.

- Risk Containment: Isolates financial exposure so overruns in one project don’t distort the overall business.
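As a rough illustration of project-level accounting, here is a sketch that keeps each project's cash flows isolated and benchmarks them on net present value and a profitability index. The project names, cash flows and 10% discount rate are hypothetical; real project accounting would also allocate shared costs, which this ignores.

```python
def npv(cash_flows: list[float], rate: float) -> float:
    """Net present value of a finite project.

    cash_flows[0] is the upfront outlay today; cash_flows[t] arrives t years later.
    """
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def profitability_index(cash_flows: list[float], rate: float) -> float:
    """NPV per dollar of upfront investment, for benchmarking projects of different size."""
    upfront = -cash_flows[0]
    return npv(cash_flows, rate) / upfront


# Hypothetical projects, each with its own isolated cash flows ($M per year).
ledger = {
    "model_v1": [-100, 200],
    "model_v2": [-1_000, 600, 600],
}
RATE = 0.10  # assumed discount rate

ranked = sorted(ledger, key=lambda name: profitability_index(ledger[name], RATE), reverse=True)
for name in ranked:
    flows = ledger[name]
    print(f"{name}: NPV {npv(flows, RATE):.1f} $M, PI {profitability_index(flows, RATE):.2f}")
```

Keeping each project in its own entry means an overrun in one (say, a bigger outlay for model_v2) changes only that project's numbers and ranking.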

By saying “each of our updated models is a project”, Dario is not wrong. Foundation AI models have a much nearer-term terminal end date than most software products, or real-world products.

So I can see grains of truth and merit in Dario’s argument. It's certainly a good way of thinking, as long as the premises are met...

Which brings me to these premises.

Flawed: His argument implies moats, pricing power and massively longer terminal value

The flaw in Dario’s framing is not in treating each new foundation model as a project.

It’s in assuming that his model-by-model projects operate (or will operate) with the same economic logic as businesses with defensible moats.

And this is where I disagree with his argumentation.

Pharma R&D projects lead to near-monopolies. That’s why you would not see a pharma company keep spending billions on R&D once it has determined that a drug cannot be patented.

Construction and real estate projects are natural monopolies. Each lot is unique, and by its very definition there cannot be a second one competing with this exact project.

Constructing new upstream power plants or transmission lines likewise leads to natural monopolies.

All of these require massive R&D or CapEx spend within a project framework, which supports Dario’s use of the pharma analogy for foundation AI models.

But pharma companies can lean on patents, airlines can at least leverage hubs and route dominance, and SaaS businesses create workflow lock-in.

These mechanisms extend terminal value far beyond the project’s build phase because they defend pricing power. Without them, terminal value collapses quickly once the project ends.
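A toy model shows how much the payback depends on how long pricing holds. The sketch assumes a project's monthly gross profit erodes by a fixed percentage each month as competitors catch up. The 2% and 25% erosion rates, the $100M cost and the $20M launch gross profit are hypothetical, chosen only to contrast a defended market with an undefended one.

```python
from typing import Optional


def cumulative_gross_profit(launch_monthly_gp: float, monthly_erosion: float,
                            months: int) -> list[float]:
    """Running total of gross profit when it erodes by a fixed share each month."""
    totals, running = [], 0.0
    for t in range(months):
        running += launch_monthly_gp * (1 - monthly_erosion) ** t
        totals.append(running)
    return totals


def payback_month(training_cost: float, launch_monthly_gp: float,
                  monthly_erosion: float, months: int) -> Optional[int]:
    """First month in which cumulative gross profit covers the training cost, else None."""
    totals = cumulative_gross_profit(launch_monthly_gp, monthly_erosion, months)
    for month, total in enumerate(totals, start=1):
        if total >= training_cost:
            return month
    return None


# Hypothetical: $100M training cost, $20M/month gross profit at launch, 24-month horizon.
print(payback_month(100, 20, 0.02, 24))  # slow erosion (defended pricing): 6
print(payback_month(100, 20, 0.25, 24))  # fast erosion (fast followers): None
```

With slow erosion the project pays back in its sixth month; with 25% monthly erosion the cumulative gross profit can never exceed $80M (20 / 0.25), so it never covers the training cost however long the horizon.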

Which is exactly what we are seeing in foundation AI models so far.

In AI, the evidence so far suggests moats are thinner than Dario's argument implies and assumes.

Model performance advantages erode in months, not years.

Switching costs are currently close to zero because APIs make competitors interchangeable. I speak from personal experience here, as we’ve designed our own AI routing and pipelines with redundancy and quick switchout in mind. So have many other sophisticated customers that I know.
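To show why switching costs are so low, here is a generic Python sketch of provider routing with fallback. It illustrates the pattern only and does not describe any specific production pipeline; provider_a and provider_b are hypothetical stand-ins for vendor SDK calls, not real APIs.

```python
from typing import Callable, Sequence

# A provider is anything that takes a prompt and returns text.
Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    pass


def route(prompt: str, providers: Sequence[tuple[str, Provider]]) -> tuple[str, str]:
    """Try providers in preference order; fall back to the next one on any error."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # timeouts, rate limits, outages, ...
            errors.append(f"{name}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for vendor SDK calls.
def provider_a(prompt: str) -> str:
    raise TimeoutError("provider_a timed out")


def provider_b(prompt: str) -> str:
    return f"[provider_b] {prompt}"


# Switching vendors is a reordering of this list, not a migration project.
PREFERENCE = [("provider_a", provider_a), ("provider_b", provider_b)]
print(route("Summarize this contract.", PREFERENCE))
```

Once every model sits behind the same one-function interface, the "switching cost" between vendors shrinks to editing a preference list.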

And we are seeing customers expect upgraded performance at little or no cost, and extremely fast. The Kano model, developed by Noriaki Kano, is a perfect guidepost to what’s really happening with fast-escalating customer expectations in foundation AI models.

Brand positioning - whether it’s “safety” or “trust” - isn’t yet strong enough to justify significant premiums. And unlike airlines, AI companies don’t even have the geography-based choke points that can generate margin resilience.

The result: the project-level returns that look great in a spreadsheet are mostly theoretical if every competitor can replicate the same capability at the same or lower cost, and if customers have been trained to expect better and cheaper every quarter. Terminal value shortens, moats disappear, and the P&L math breaks.

It’s basically an arms race as of today, and I expect to hear more facts (or storytelling) about where moats and pricing power will come from.

And that’s where Dario’s argument falls very short, in my opinion. The industry doesn’t yet have the structural features to sustain pricing power. Until AI companies can demonstrate real moats, whether through proprietary data, deep integration, or verticalization, the “each model is a profitable project” argument is an accounting fantasy rather than the foundation of a durable business model. It ignores the very short terminal value, and the lack of moats, that determine whether the parent company can actually harvest profits.

Can these moats ever come for foundation AI or consumer wrappers? I don’t know. I am not convinced, but I truly don’t know and need to see more time play out to say ‘yes’ or ‘no’.

I am much more convinced by wrappers that target deep enterprise use cases through data infrastructure/ontology, a system of record, or another form of workflow ownership.

So for your project economy (AI) startup: this is where you want to be!

Companies Mentioned

Anthropic: https://www.anthropic.com

Cursor: https://www.cursor.so

GitHub Copilot: https://github.com/features/copilot

Perplexity: https://www.perplexity.ai

Spotify: https://www.spotify.com

Netflix: https://www.netflix.com

Salesforce: https://www.salesforce.com

Patric Hellermann: https://www.linkedin.com/in/aecvc/
